This adapter is tested with the YOLOv3 PyTorch and YOLOv5 PyTorch repositories. Bounding boxes are normalized to the range [0, 1]. Images should be in a separate folder (named e.g. images/). Annotations should be in a separate folder (named e.g. labels/), and each annotation file must have the same file name as its corresponding image file, but with the extension .txt. The categories.txt file in the parent folder lists all category labels; the position (index) of a label in that file is used as the bounding box class (see the Annotation Format paragraph below). Below is an example structure:

- categories.txt
- images/
  - image1.jpg
- labels/
  - image1.txt

Supported annotations:

- rectangle

The bounding box values are normalized between 0 and 1.

- Normalization formula: x / image_width or y / image_height

- Denormalization formula: x * image_width or y * image_height

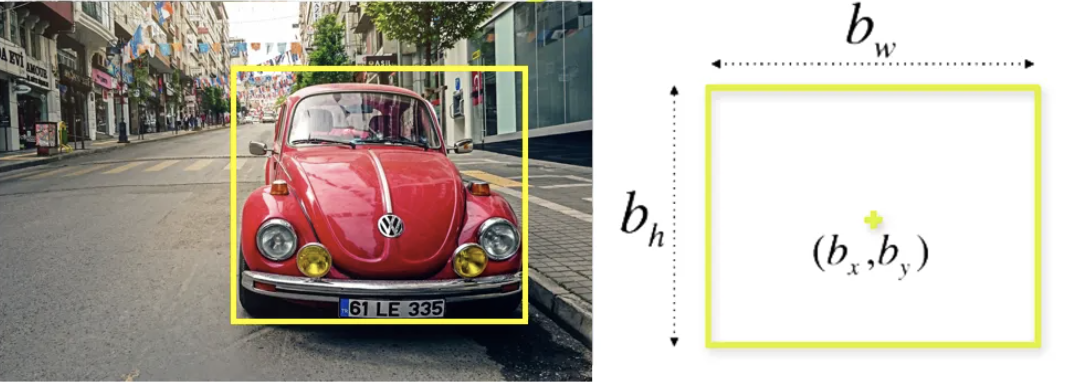

A bounding box has the following 4 parameters:

- Center X (bx)

- Center Y (by)

- Width (bw)

- Height (bh)
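
As a minimal sketch of how these four parameters relate to a box given in pixels, the following converts corner coordinates to the normalized center format and back, using the normalization and denormalization formulas above. The function names and the corner-based input are assumptions made for illustration; they are not part of the adapter.

```python
def corners_to_yolo(x_min, y_min, x_max, y_max, image_width, image_height):
    """Convert a pixel-space corner box to normalized (bx, by, bw, bh)."""
    bx = (x_min + x_max) / 2 / image_width    # center x, normalized
    by = (y_min + y_max) / 2 / image_height   # center y, normalized
    bw = (x_max - x_min) / image_width        # width, normalized
    bh = (y_max - y_min) / image_height       # height, normalized
    return bx, by, bw, bh


def yolo_to_corners(bx, by, bw, bh, image_width, image_height):
    """Convert normalized (bx, by, bw, bh) back to pixel-space corners."""
    x_min = (bx - bw / 2) * image_width
    y_min = (by - bh / 2) * image_height
    x_max = (bx + bw / 2) * image_width
    y_max = (by + bh / 2) * image_height
    return x_min, y_min, x_max, y_max
```

For example, in a 640 x 480 image, a box spanning pixels (160, 120) to (480, 360) becomes bx = by = bw = bh = 0.5.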

Each annotation file (e.g. image1.txt) is a plain-text file with space-separated values, where every row represents one bounding box in the image.

A row has the following format:

`<class_number> <center_x> <center_y> <width> <height>`

- class_number: The index of the class as listed in categories.txt

- center_x: The normalized bounding box center x value

- center_y: The normalized bounding box center y value

- width: The normalized bounding box width value

- height: The normalized bounding box height value
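
The row format above could be read along the following lines. This is only a sketch: `YoloBox` and `parse_annotation_lines` are hypothetical names, and it assumes that class_number is a zero-based index into the list of categories, as is conventional for YOLO annotations.

```python
from typing import NamedTuple


class YoloBox(NamedTuple):
    category: str
    center_x: float
    center_y: float
    width: float
    height: float


def parse_annotation_lines(lines, categories):
    """Parse rows of the form: class_number center_x center_y width height."""
    boxes = []
    for line in lines:
        parts = line.split()
        if not parts:
            continue  # skip blank lines
        if len(parts) != 5:
            raise ValueError(f"expected 5 values, got {len(parts)}: {line!r}")
        class_number = int(parts[0])  # assumed zero-based index into categories
        values = [float(p) for p in parts[1:]]
        if not all(0.0 <= v <= 1.0 for v in values):
            raise ValueError(f"values must be normalized to [0, 1]: {line!r}")
        boxes.append(YoloBox(categories[class_number], *values))
    return boxes
```

With categories `["cat", "dog"]`, the row `1 0.5 0.5 0.25 0.4` would yield one box labeled "dog", centered in the image.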

The adapter has the following parameters:

- --path: The path to the base folder containing the annotations (e.g. data/object_detection/my_collection).

- --categories_file_name: The path to the categories file. Defaults to categories.txt if not set.

- --images_folder_name: The name of the folder containing the image files. Defaults to images if not set.

- --annotations_folder_name: The name of the folder containing the image annotations. Defaults to labels if not set.
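
To illustrate how the folder layout and these defaults fit together, here is a rough sketch that pairs each image with its annotation file by file name. It is not the adapter's implementation: the function names are hypothetical, and it assumes that categories.txt holds one label per line.

```python
from pathlib import Path


def read_categories(path, categories_file_name="categories.txt"):
    """Read category labels, assuming one label per line."""
    text = (Path(path) / categories_file_name).read_text(encoding="utf-8")
    return [line.strip() for line in text.splitlines() if line.strip()]


def pair_images_with_labels(path, images_folder_name="images",
                            annotations_folder_name="labels"):
    """Return (image_file, label_file or None) pairs, sorted by image name."""
    base = Path(path)
    pairs = []
    for image_file in sorted((base / images_folder_name).iterdir()):
        if not image_file.is_file():
            continue
        # The annotation shares the image's file name, with a .txt extension.
        label_file = base / annotations_folder_name / (image_file.stem + ".txt")
        pairs.append((image_file, label_file if label_file.exists() else None))
    return pairs
```

An image without a matching .txt file is paired with `None` here; whether the adapter treats such images as unannotated or as an error is not specified in this document.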